Why AI productivity feels like a trap (and what to do about it)

Six hidden patterns that drive the tension between efficiency and capability

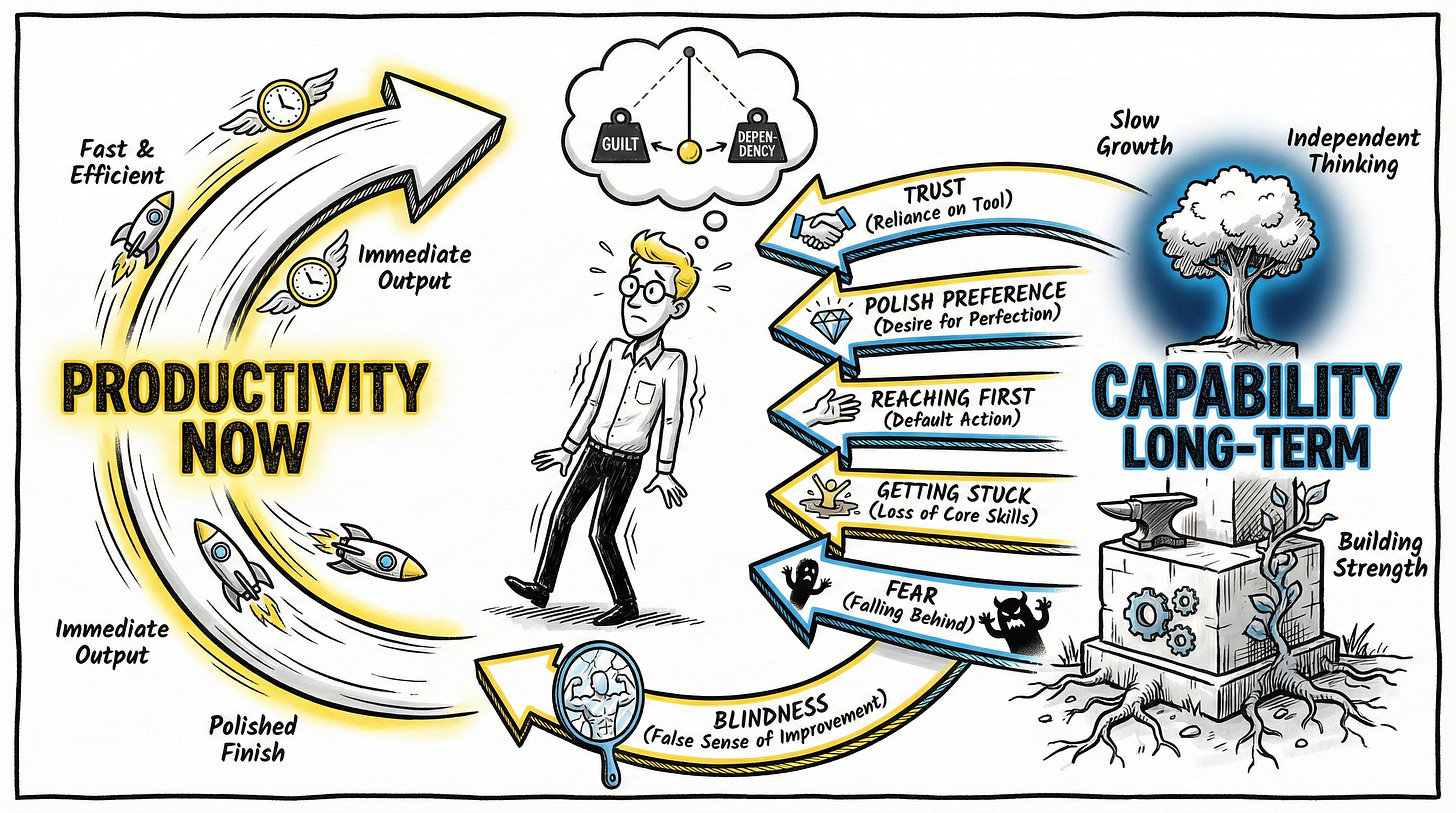

Key Points:

AI creates a daily tension: productivity now versus capability in the long term

Five patterns drive you toward AI: trusting its answers too much, preferring polished output over rough thinking, reaching for AI before trying yourself, getting stuck in AI’s suggestions, and fearing you’ll fall behind

One pattern keeps you blind: the false sense that using AI makes YOU more competent, when the AI is doing the thinking

The Productivity-Capability Trap

Last issue, I said your professional value lives in creative agency: being in the driver’s seat of your work, not the passenger seat. I promised that this issue would expand on what it means to maintain that agency.

But as I researched this, I discovered something. The discomfort many professionals experience with AI isn’t one thing. It shows up in different ways.

You might experience:

Work that feels less yours – like you’ve become someone who arranges AI’s ideas rather than creates your own

Growth that’s stopped – productivity went up but your skills stayed flat

Uncertain contribution – you can’t confidently defend which parts of your AI-assisted work are yours

Anxiety about keeping up – pressure to use AI more, but you’re not sure how to use it well

Worry about long-term effects – you’re productive with AI now but concerned about where your thinking will be in two years

One framework that might help make sense of some of this is Self-Determination Theory (SDT). It suggests we need three things to feel satisfied in our work: autonomy (ownership, a feeling of control), competence (growth, getting better), and relatedness (meaningful contribution, knowing your work matters).

When AI does too much of your cognitive work, it can violate these needs. Work feels like arranging someone else’s ideas (autonomy violation). You’re not growing (competence violation). You’re uncertain if your contribution matters (relatedness violation).

But SDT doesn’t explain everything on that list. Anxiety about keeping up? That’s something different: social pressure, competition, fear of missing out. Worry about long-term effects? That’s concern about problems that haven’t happened yet.

What I’m developing is a framework to address both. Here’s what I’m learning.

The Core Tension

Here’s the tension: AI makes you more productive. You can ship more work, faster, with better polish. But that efficiency may come at a cost. When you use AI for thinking you could do yourself, you’re not exercising those mental capabilities. And much like muscles (though the brain works differently), capabilities you don’t use may weaken over time.

So you face a choice on every task: Do I think through this myself (slower, harder, but I stay strong)? Or do I use AI (faster, easier, but am I getting weaker)?

Most of us swing between guilt and dependency. We use AI, feel guilty about it, promise to think more independently tomorrow, then use AI again because we need to keep up.

Hidden Patterns That Drive You Toward AI

Understanding these patterns, which overlap and reinforce each other, helps you recognize when they’re making the choice for you.

1. Trusting AI Too Much (similar to Automation Bias)

You trust AI suggestions over your own judgment. You ask AI for analysis, get a confident answer, and accept it without checking it against your own thinking.

Example: You’re writing a strategy memo. AI suggests a framework. It sounds good, so you use it. But you never verified whether it fits your client’s actual situation. In one 2024 study, nearly 60% of participants trusted AI outputs without verifying them.

2. Preferring Polished Output (Fluency Bias)

You judge something as better because it sounds smooth and polished. AI’s output is grammatically perfect, well-structured, confident. Your rough thinking feels messy and uncertain by comparison.

Example: You draft an email. It’s clear but not polished. AI rewrites it beautifully. You think “this is better”. But was the original message actually worse, or did polish fool you into thinking so?

3. Reaching for AI First (Cognitive Offloading)

You reach for external help instead of using your internal thinking. Each time you ask AI before trying to think yourself, you skip the mental exercise.

Example: You face a problem. Your first instinct is “let me ask AI”. But you never gave your brain a chance to work through it. Over time, this reflex may weaken your ability to solve problems independently.

4. Getting Stuck in AI’s Frame (Anchoring Effect)

AI’s first output is so polished you get locked into its framing. You stop exploring your own ideas because they feel inferior by comparison.

Example: You ask AI to brainstorm solutions to a client problem. It gives you three great options. You evaluate those three, but you never generate your own alternatives. AI’s frame became your frame, and you lost the chance to think beyond it.

5. Fearing You’ll Fall Behind (Loss Aversion)

Your fear of falling behind drives you to overuse AI. “I NEED AI to keep up” becomes the justification for every shortcut. The hype around AI, combined with the information-bubble effect of social media, makes it harder to see how much you actually need it.

Example: Your competitor ships faster using AI. You panic. You start using AI for everything, even for thinking you used to do yourself. Fear of losing your competitive position drives the choice.

The Pattern That Keeps You Blind

Here’s what makes this tension so hard to recognize:

6. The False Sense of Improvement

Using AI makes you FEEL more competent. You’re shipping better work, getting praise, solving harder problems. But if the AI is doing the thinking, you might not actually be getting better. Research on automation and skill development suggests this creates what some call “automation complacency”: you feel capable because the system performs well, not because your skills improved.

Example: You use AI to write code for six months. You feel like you’re learning faster, becoming more capable. But when AI goes down for a day, you realize you can’t write the same quality code yourself. You thought you were developing expertise, but you might have been developing dependency.

This is perhaps the most concerning pattern because it prevents you from seeing the other five. When you feel competent, you don’t question whether you’re trusting AI too much, preferring polish over substance, reaching for it reflexively, getting stuck in its frame, or being driven by fear. The false sense of growth can mask the reality of capability erosion.

How These Patterns Work Together

These six patterns are interconnected, not independent. Together they create a reinforcing cycle that’s hard to escape:

You trust AI, so you reach for it first. Reaching for it first becomes a habit. Its polished output locks your thinking into its frame. You can’t think beyond what AI suggested. You feel productive, which creates a false sense that you’re improving. That feeling prevents you from noticing your independent capability may be weakening. Fear of falling behind without AI keeps you trapped.

Each pattern strengthens the others. The more you trust AI, the more you reach for it. The more you reach for it, the less you exercise independent thinking. The weaker that muscle, the more you depend on AI. The false sense of improvement blinds you to this cycle. Fear keeps you from breaking it.

The result: You’re more productive but may be less capable. Your work is good but doesn’t feel yours. You’re keeping up but potentially getting weaker, and you might not realize it until it’s too late.

Creative Agency: The Answer

This is where creative agency matters. Remember the chef from last issue facing the food replicator?

His value wasn’t avoiding the replicator. His value was knowing when to use it and when not to:

Judgment about when - Is this a special anniversary dinner (cook from scratch) or a quick Tuesday lunch (replicator for prep)?

The skills to deploy - When cooking matters, he draws on experience, reasoning, and trained judgment

Taking responsibility - He puts his name on the meal either way

Creative agency isn’t the skills themselves. It’s the position you occupy as the one making decisions, knowing when to deploy your skills versus when to delegate, and taking responsibility for those choices.

But to make those decisions, you need to recognize these six patterns in action. Catch yourself before you trust AI without verifying. Question whether polished output is actually better. Notice when you’re reaching for AI instead of thinking. Resist getting locked into AI’s frame by generating your own alternatives. Recognize when fear is driving the choice. And most importantly, question whether you’re actually getting better or just producing better output with AI’s help.

What Comes Next

I’m developing a framework to navigate this tension. Three phases:

RECOGNIZE (this issue) - Understanding how AI may erode capability through these six patterns.

RESTORE (next five issues) - Building five core capabilities that make you powerful whether you use AI or not:

Thinking grounded in real experience (Embodiment)

Reasoning from first principles (Reasoning)

Judging the quality of thinking (Metacognition)

Learning through struggle (Learning)

Developing your unique voice (Originality)

These aren’t just defenses against AI overuse. They’re what let you use AI effectively while staying in the driver’s seat. Strengthen these five, and you’re prepared for whatever AI becomes.

AMPLIFY (future issues) - Working WITH AI skillfully to multiply your impact while preserving what makes you valuable.

Next issue, I’ll introduce the first dimension: your ability to reason from first principles. Once you can name what matters, you can strengthen it deliberately.

Try This

Exercise: Catch Yourself in the Patterns

This week, notice when you reach for AI. Before you do, ask:

Trust check: Am I about to accept AI’s answer without verifying it against my own thinking?

Polish check: Am I choosing AI because the output sounds better, or actually is better?

Reflex check: Could I think through this myself first, even just for five minutes?

Frame check: If I use AI, will I generate my own alternatives too, or just evaluate what it gives me?

Fear check: Am I reaching for AI because I’m afraid of falling behind?

Improvement check: Am I using AI so much that I can’t tell if I’m getting better or just getting better output?

Just notice. Don’t judge yourself. Awareness is the first step.

A Final Thought

P.S. This framework (RECOGNIZE → RESTORE → AMPLIFY) is foundational to The Augmented Mind, the book I’m writing: a guide to preserving what makes you valuable as AI gets better.

If you derive meaning and identity from your intellectual work and want to maintain intellectual ownership while using AI strategically, join me on the journey.

Sources & Further Reading:

Microsoft Research (2024). The Metacognitive Demands of Generative AI. Study showing AI systems impose constant metacognitive demands, requiring users to monitor and control output quality with every interaction.

MIT Media Lab (2025). Your Brain on ChatGPT: Cognitive Debt. EEG study revealing AI use reduces brain connectivity during writing tasks, impairs learning, and weakens recall.

Research study (2024). Performance and Metacognition Disconnect in AI Interaction. Study showing users overestimate their performance by more than AI actually improves it. Nearly 60% of participants trusted AI outputs without verification.

Harvard Business School (2023). Navigating the Jagged Technological Frontier. BCG study with 758 consultants showing 12-40% productivity gains inside AI’s capability frontier, but 19% worse performance outside it.

Julia Kołodko (2025). AI and Meaningful Work. Applies self-determination theory to AI automation, examining how removing meaningful tasks violates core psychological needs.